12.2.2021

Nowadays, we all hear about the impact of Artificial Intelligence (AI) in various fields and applications.

Of course, when it comes to problem solving, nature already has the best solutions. In other words, we are trying to interpret natural solutions and replicate them for our own problems.

Furthermore, a solution is only valid within an agreed perception domain. In fact, the key features that represent our perception can change when the perception domain changes. Accordingly, for a robot, each type of sensor data provides a different feature space, and together these feature spaces make up the robot's perception. That is why our micro-robotic platform has multiple sensors: they extract key features from different domains and thereby expand the machine's perception.

My research project is about the automation of a micro-robotic platform using AI to increase the throughput of mechanical property characterization of fibrous materials. The project involves developing the existing hardware and a user-friendly software interface, collecting sensor data, implementing feedback-controlled movements, problem-dependent data processing and analysis, and applying machine/deep learning methods for failure prediction, anomaly detection, object detection/recognition, and automated action sequences using reinforcement learning.
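Feedback-controlled movement can be illustrated with a minimal proportional controller. This is a sketch under simplifying assumptions, not the platform's actual control code: the stage is simulated so that it moves exactly by the commanded step, and the function name `move_to_target`, the gain `kp`, and the tolerance are hypothetical.

```python
def move_to_target(target_um, start_um=0.0, kp=0.5, tolerance_um=0.1, max_steps=100):
    """Drive a *simulated* micro-positioner toward target_um with a P-controller.

    Hypothetical model: each command moves the stage by exactly the commanded
    step, so the "measured" position equals the new position.
    """
    position = start_um
    history = [position]
    for _ in range(max_steps):
        error = target_um - position      # feedback: distance still to travel
        if abs(error) <= tolerance_um:    # close enough, stop correcting
            break
        step = kp * error                 # proportional correction
        position += step                  # simulated actuator response
        history.append(position)
    return position, history


final, path = move_to_target(100.0)
print(final, len(path))
```

With `kp=0.5` the remaining error halves at every iteration, so a 100 µm move from zero ends within 0.1 µm after 10 corrections. On real hardware the position would be read back from the positioner's sensor instead of being assumed, and step quantization and noise would slow convergence.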

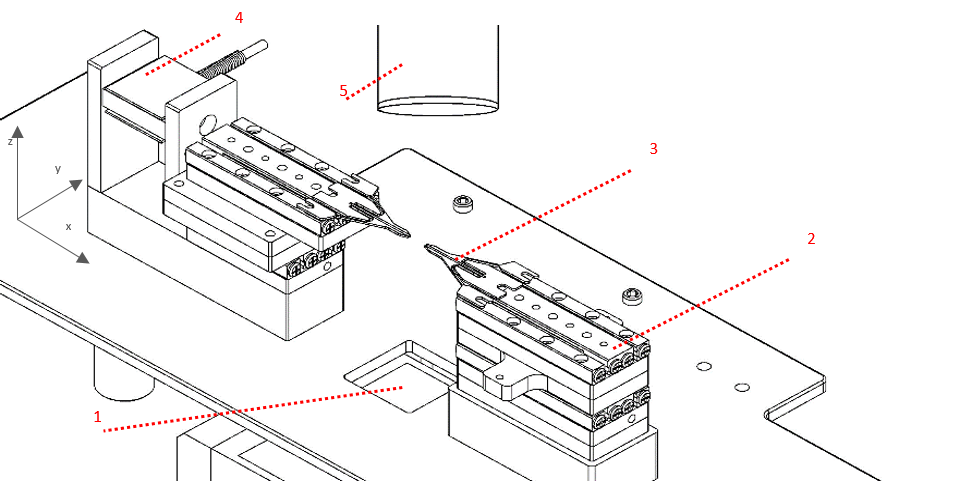

Our micro-robotic platform consists of 1) a light source to illuminate the sample plane, 2) micro-actuators that move in micron steps, 3) micro-grippers to grasp the fibers, 4) a force sensor along the fiber axis, and 5) optical lens tubes and a camera for microscopy imaging.

The feature space of our platform is formed by data from the micro-positioners (2), the force sensor (4), and the imaging camera (5). These data are numerical values for position and force load, plus image data; the feature space is therefore multimodal (i.e., different data types representing the same context).
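One way to keep multimodal data together is to bundle each synchronized reading into a single record. The sketch below is an assumption about the layout, not the platform's actual data format: the class `MultimodalSample`, its field names, and the micrometre unit for positions are hypothetical, and the force unit is left to whatever the sensor reports.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class MultimodalSample:
    """One synchronized reading from the platform (hypothetical layout)."""
    timestamp_s: float
    positions_um: np.ndarray  # micro-positioner coordinates (assumed micrometres)
    force: float              # force along the fiber axis, in the sensor's own unit
    image: np.ndarray         # grayscale camera frame, shape (H, W)

    def numeric_features(self) -> np.ndarray:
        """Concatenate the numerical modalities into one feature vector."""
        return np.concatenate([self.positions_um, [self.force]])


sample = MultimodalSample(
    timestamp_s=0.0,
    positions_um=np.array([10.0, 250.0, 5.0]),
    force=0.02,
    image=np.zeros((480, 640), dtype=np.uint8),
)
print(sample.numeric_features())  # numerical part; the image is handled separately
```

Keeping the image as a separate field reflects the idea that each modality is fed to a model suited to it, while the shared timestamp ties them to the same context.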

The AI used in our solutions is based on different models: conventional signal processing of position and force data for Anomaly Detection, conventional computer vision algorithms on image data for Object Detection and Feature Extraction, and Machine Learning (ML) models on numerical and image data for Regression, Prediction, Feature Extraction, Object Detection/Recognition, and Image Segmentation.
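As one example of conventional signal processing for anomaly detection, a rolling z-score flags force readings that depart sharply from the recent trend, such as a sudden drop when a fiber breaks. The post does not say which method is used; this swapped-in technique, the function `detect_force_anomalies`, and its `window` and `threshold` values are illustrative assumptions.

```python
import numpy as np


def detect_force_anomalies(force, window=20, threshold=4.0):
    """Flag samples that deviate strongly from the preceding window.

    Sample i is flagged when |force[i] - mean| > threshold * std, where mean
    and std are computed over force[i - window:i]. The first `window`
    samples are never flagged because they have no full history.
    """
    force = np.asarray(force, dtype=float)
    flags = np.zeros(force.shape, dtype=bool)
    for i in range(window, len(force)):
        past = force[i - window:i]
        mu, sigma = past.mean(), past.std()
        if sigma == 0:
            flags[i] = force[i] != mu  # flat history: any change is anomalous
        else:
            flags[i] = abs(force[i] - mu) > threshold * sigma
    return flags


# Synthetic example: a linear loading ramp that drops to zero at sample 80.
force = np.linspace(0.0, 1.0, 100)
force[80:] = 0.0
flags = detect_force_anomalies(force)
print(np.flatnonzero(flags)[0])  # prints 80
```

On a steady linear ramp each reading sits only about 1.8 standard deviations above the trailing mean, so nothing is flagged until the simulated break.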

ML models in our project can come from a wide range of methods: from classical ML methods such as K-Nearest Neighbors (KNN), Support Vector Machines (SVM), and Decision Trees (DT) to Deep Neural Networks (DNN), applied to numerical data for Regression, Classification, and Prediction. Furthermore, for Spatial Feature Extraction, Object Detection/Recognition, and Image Segmentation, Convolutional Neural Networks (CNN), a well-known Deep Learning (DL) method, are applied to the microscopy images from the top-view camera. So, for each data type and specific problem, we need to feed a suitable model with properly pre-processed data.
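To make the point about pre-processing concrete, the sketch below standardizes numerical features with training-set statistics and then classifies with K-Nearest Neighbors, one of the classical methods mentioned above. It is a minimal NumPy illustration, not the project's pipeline: the two features and all values are synthetic toy data chosen only so that the feature scales differ, and the function names are hypothetical.

```python
import numpy as np


def standardize(train, test):
    """Scale features using statistics of the training set only."""
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    return (train - mean) / std, (test - mean) / std


def knn_predict(x_train, y_train, x_test, k=3):
    """Classify each test row by majority vote of its k nearest training rows."""
    preds = []
    for x in x_test:
        dists = np.linalg.norm(x_train - x, axis=1)  # Euclidean distances
        nearest = np.argsort(dists)[:k]
        labels, counts = np.unique(y_train[nearest], return_counts=True)
        preds.append(labels[np.argmax(counts)])
    return np.array(preds)


# Toy data: two features on very different scales, two hypothetical classes.
x_train = np.array([[1.0, 100.0], [1.2, 110.0], [0.9, 95.0],
                    [3.0, 300.0], [3.2, 310.0], [2.9, 290.0]])
y_train = np.array([0, 0, 0, 1, 1, 1])
x_test = np.array([[1.1, 105.0], [3.1, 305.0]])

x_train_s, x_test_s = standardize(x_train, x_test)
print(knn_predict(x_train_s, y_train, x_test_s, k=3))  # prints [0 1]
```

Without standardization the second feature, being about 100 times larger, would dominate the Euclidean distance; fitting the scaling on the training set only keeps test data from leaking into the pre-processing.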

In the end, the problem translates into understanding the robot's perception, the data types, and the existing ML/DL methods. Finally, it is worth noting that a well-trained AI is analogous to a well-evolved Natural Intelligence (NI).

Writer:

Ali Zarei

ESR2 - Tampere University